Material#

Supervised learning using scikit-learn#

Instructor#

Nikhil Bhagwat, PhD is an Academic Associate in the ORIGAMI lab (PI: Dr. JB Poline) at McGill University. He completed his PhD thesis on prognostic applications for Alzheimer’s disease using MR imaging and machine-learning (ML) techniques in the CoBrA Lab (PI: Dr. Mallar Chakravarty) at the University of Toronto. Subsequently, he worked as a researcher at the University of Massachusetts and the Allen Institute. His current research interests include disease staging, subtyping, and prognosis using ML models, along with development of neuroinformatics tools for improving reproducibility and sustainability of computational pipelines.

Objectives#

Define machine-learning nomenclature

Describe basics of the “learning” process

Explain model design choices and performance trade-offs

Introduce model selection and validation frameworks

Explain model performance metrics

Questions you will be able to answer after taking this module:#

Model training - what is under/over-fitting?

Model selection - what is (nested) cross-validation?

Model evaluation - what are type-1 and type-2 errors?
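The three questions above can be tied together in one short scikit-learn sketch. It is only an illustration on synthetic data: the dataset, the linear SVM, and the grid of `C` values are assumptions made here, not the module's actual material. The inner loop selects a hyperparameter, the outer loop estimates generalization, and the confusion matrix separates type-1 errors (false positives) from type-2 errors (false negatives).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import GridSearchCV, StratifiedKFold, cross_val_predict
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic binary classification data (assumption: stands in for real features/labels).
X, y = make_classification(n_samples=200, n_features=20, n_informative=5, random_state=0)

# Inner loop: choose the regularization strength C using training folds only.
inner_cv = StratifiedKFold(n_splits=3, shuffle=True, random_state=0)
model = GridSearchCV(
    make_pipeline(StandardScaler(), SVC(kernel="linear")),
    param_grid={"svc__C": [0.01, 0.1, 1.0, 10.0]},
    cv=inner_cv,
)

# Outer loop: predict each sample from a model that never saw it during selection.
outer_cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=1)
y_pred = cross_val_predict(model, X, y, cv=outer_cv)

# Confusion matrix: false positives are type-1 errors, false negatives are type-2 errors.
tn, fp, fn, tp = confusion_matrix(y, y_pred).ravel()
accuracy = (tp + tn) / len(y)
print(f"accuracy={accuracy:.2f}, type-1 errors (FP)={fp}, type-2 errors (FN)={fn}")
```

A very small `C` tends toward under-fitting and a very large one toward over-fitting; nesting the search keeps that choice from inflating the reported accuracy.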

Materials#

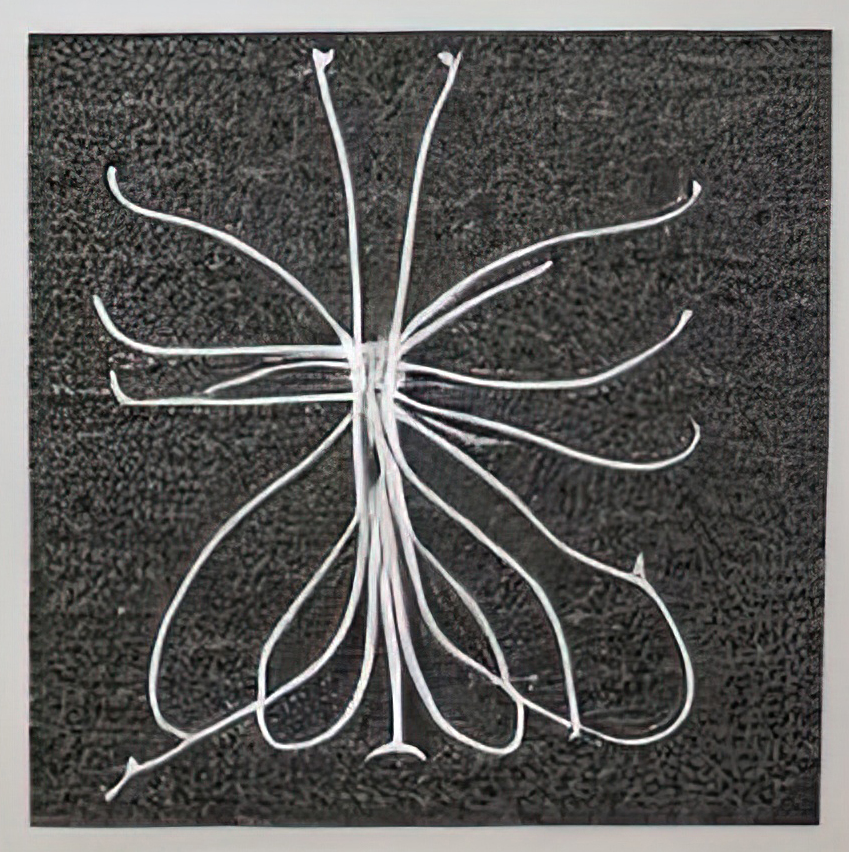

Representational structure in neural time series using calcium imaging and electrophysiology#

Instructors#

Quinn Lee, PhD is a CIHR/FRQ postdoctoral research fellow in the Brandon Lab at McGill University (Montreal, QC, Canada). Prior to joining the Brandon Lab, he completed his PhD at the University of Lethbridge (Lethbridge, AB, Canada) with Drs. Robert Sutherland and Robert McDonald, studying how long-term memory is organized at the systems level in the brain. His current work aims to understand how aspects of experience and memory are represented in neuronal population activity in the rodent hippocampus across protracted experience. To this end, he uses a combination of miniscope calcium imaging in freely moving animals, advanced behavioral tracking, and computational methods to explore questions about neuronal representation and behavior.

Giuseppe P Gava, PhD is a postdoctoral neuroscientist in the Dupret Lab at the MRC BNDU, University of Oxford. He was awarded a PhD from the Centre for Doctoral Training in Neurotechnology at Imperial College London, where he also graduated in Biomedical Engineering. He aims to use concepts from network science, topology and information theory to understand the complex neural circuitry and dynamics that shape memory and cognition.

Objectives#

Data analysis for electrophysiological time series from freely-behaving animals

Data analysis for in vivo calcium imaging time series from freely-behaving animals

Assess the co-firing structure and topology of neuronal networks, comparing them using Riemannian metrics

Compare representation in neural data to theoretical models with representational similarity analysis (RSA) and manifold-based analyses
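As a rough illustration of the last objective, representational similarity analysis can be sketched in a few lines: build one dissimilarity matrix from a theoretical model and one from neural activity, then compare them with a rank correlation. Everything below is simulated, and the choice of distance metrics is an assumption made for the example rather than the session's own pipeline.

```python
import numpy as np
from scipy.spatial.distance import pdist
from scipy.stats import spearmanr

rng = np.random.default_rng(0)

# Hypothetical data: 8 conditions, each described by a 3-D "model" feature vector.
n_conditions, n_neurons = 8, 50
model_features = rng.normal(size=(n_conditions, 3))

# Simulated population responses: a noisy linear readout of the model features.
readout = rng.normal(size=(3, n_neurons))
neural = model_features @ readout + 0.5 * rng.normal(size=(n_conditions, n_neurons))

# Representational dissimilarity matrices, stored as condensed upper triangles.
model_rdm = pdist(model_features, metric="euclidean")
neural_rdm = pdist(neural, metric="correlation")

# Compare the two representational geometries with a rank correlation.
rho, _ = spearmanr(model_rdm, neural_rdm)
print(f"model-neural RDM similarity (Spearman rho) = {rho:.2f}")
```

The rank correlation is used because the two matrices are measured in different units; only the ordering of dissimilarities is compared.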

Materials#

Keynote: What’s the endgame of neuroAI?#

Patrick Mineault, PhD is a neurotechnologist and CEO of xcorr consulting. His career spans academia and industry: he was a data scientist at Google, a research scientist at Meta working on brain-computer interfaces, founder of a medtech startup and founding CTO of the educational nonprofit Neuromatch. His research at the intersection of neuroscience and AI has been published in NeurIPS, Neuron, PNAS, J Neurosci and PLoS Comp Bio. He is the author of the Good Research Code Handbook and writes a popular computational neuroscience blog, xcorr.net. He obtained his PhD in computational neuroscience at McGill University.

Summary: Neuroscience and AI have a long, intertwined history. Artificial intelligence pioneers looked to the principles of the organization of the brain as inspiration to make intelligent machines. In a surprising reversal, AI is now helping us understand its very source of inspiration: the human brain. Over the next decade, we’ll make ever more precise in silico brain models. As a result, we’ll soon be able to download and use sensory models, on demand, with the same convenience that we can do object recognition or natural language processing.

In this talk, I will take you on a tour of the near future. I will argue that we should use this newfound capability to help improve human health, both for people with neurological disorders and to enhance the well. I discuss enabling technological trends: advances in AI, industrial use cases, AR/VR and BCI. I invite everyone to reflect on the role of innovation and academia to help humans flourish.

Materials#

Introduction to deep learning using PyTorch#

Instructors#

Mohammad Yaghoubi is a PhD student at McGill University. He conducts his research under the supervision of Dr. Mark Brandon in the Integrated Program in Neuroscience. His research focuses on developing statistical and machine-learning based tools to better analyze high-dimensional neuronal and behavioral data.

Thomas Jiralerspong is an undergraduate student in Honours Computer Science at McGill. He is also an undergraduate researcher with Professor Blake Richards, interested in generalization in reinforcement learning and cognitively inspired reinforcement learning models.

Krystal Pan is a Master’s student under the supervision of Dr. Blake Richards. She is interested in biologically plausible AI models of the visual system. She is also the Lab Manager of the LiNC Lab.

Objectives#

Understand basic concepts in deep learning

Discover the PyTorch interface to build artificial neural networks

Train a basic model using PyTorch

Materials#

The training material is adapted from Neuromatch Academy. Note that videos recorded by Prof. Konrad Kording are included in the Google Colab resource. The main educational session consisted purely of hands-on support for the participants, and a recording of this session will not be made available.

Machine learning in functional MRI using Nilearn#

Instructors#

Yasmin Mzayek obtained her Master’s in Brain and Cognitive Sciences from the University of Amsterdam. During this time she did an internship at the Netherlands Cancer Institute in Amsterdam, where she worked on analyzing diffusion-weighted imaging data. She then went to Aix Marseille University to work on pulse sequence programming for diffusion MR spectroscopy as well as processing and analysis of data from this modality. She also worked as a scientific programmer and data scientist at the University of Groningen. Currently, she is a research engineer at INRIA working on maintaining the Nilearn Python toolkit.

Hao-Ting Wang, PhD is an IVADO postdoctoral fellow at CRIUGM. Her project focuses on discovery of transdiagnostic brain biomarkers amongst neurodegenerative conditions from multiple open access datasets. Her expertise lies in fMRI data processing, functional connectivity, and data workflow construction. She is also a core developer of Nilearn with a focus on fMRI data processing and feature extraction.

Objectives#

Understand the structure of functional magnetic resonance imaging data.

Generate correlation matrices using fMRI time series (aka “connectomes”).

Visualize brain maps and connectomes.

Train machine learning models to classify subjects by age groups based on brain connectivity.
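The path from time series to connectome to classifier can be sketched as follows. To stay self-contained, this example uses NumPy and scikit-learn on simulated signals instead of Nilearn and real fMRI data; the group difference (an extra shared signal between two regions) is invented purely for illustration.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

rng = np.random.default_rng(0)
n_subjects, n_timepoints, n_regions = 40, 120, 10

def connectome_features(time_series):
    """Correlation matrix of a (timepoints x regions) array, flattened to its upper triangle."""
    corr = np.corrcoef(time_series, rowvar=False)
    upper = np.triu_indices_from(corr, k=1)
    return corr[upper]

# Synthetic subjects: in group 1, regions 0 and 1 share an extra common signal.
labels = np.repeat([0, 1], n_subjects // 2)
features = []
for label in labels:
    ts = rng.normal(size=(n_timepoints, n_regions))
    if label == 1:
        shared = rng.normal(size=n_timepoints)
        ts[:, 0] += shared
        ts[:, 1] += shared
    features.append(connectome_features(ts))
X = np.vstack(features)

# Classify group membership from connectivity with 5-fold cross-validation.
scores = cross_val_score(LogisticRegression(max_iter=1000), X, labels, cv=5)
print(f"mean accuracy = {scores.mean():.2f}")
```

Only the upper triangle is kept because a correlation matrix is symmetric with a constant diagonal, so the remaining entries carry no extra information.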

Materials#

Keynote: Aligning representations in brains and machines#

Elizabeth DuPre, PhD is a Wu Tsai interdisciplinary postdoctoral research fellow at Stanford University, working between Prof. Russ Poldrack and Prof. Scott Linderman. As a psychologist and computational neuroscientist, her work focuses on modeling individual brain activity across a range of cognitive states, and assessing the generalizability of these individualized models, by extending statistical methods for human neuroimaging data analysis. Through her work, Dr. DuPre has taken an active role in developing tools in the open source Python ecosystem, with a focus on improving the reproducibility of analysis workflows.

Summary: Computational neuroscience is focused on uncovering general organizational principles supporting neural activity and behavior; however, uncovering these principles relies on making appropriate comparisons across individuals. This presents a core technical and conceptual challenge, as individuals differ along nearly every relevant dimension: from the number of neurons supporting computation to the exact computation being performed. Similarly in artificial neural networks, multiple initializations of the same architecture—on the same data—may recruit non-overlapping hidden units, complicating direct comparisons of trained networks.

In this talk, I will introduce techniques for aligning representations in both brains and in machines. I will argue for the importance of considering alignment methods in developing a comprehensive science at the intersection of artificial intelligence and neuroscience that reflects our shared goal of understanding principles of computation. Finally, I will consider current applications and limitations of these techniques, discussing relevant future directions for this area.

Materials#

Model selection and validation#

Instructor#

Jérôme Dockès is a post-doc in the ORIGAMI lab (PI: Dr. JB Poline) at McGill University. He completed his PhD thesis on statistical methods for large-scale meta-analysis of neuroimaging studies in the Parietal lab at INRIA. His current work focuses on tools and resources to facilitate text-mining and meta-analysis of the neuroimaging literature.

Objectives#

Understand how to evaluate the performance of machine learning models.

Learn about hyperparameters and model selection.

Learn about pitfalls when validating machine learning models and how to easily avoid them using scikit-learn.
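One common validation pitfall, and the scikit-learn idiom that avoids it, can be sketched on pure noise. The example is an assumption-laden toy (random features, random labels, univariate feature selection), not the session's own notebook: selecting features on the full dataset before cross-validation leaks label information, while wrapping the same step in a `Pipeline` refits it on each training fold.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

rng = np.random.default_rng(0)

# Pure noise: labels are independent of the features, so true accuracy is at chance.
X = rng.normal(size=(100, 5000))
y = rng.integers(0, 2, size=100)

# Pitfall: selecting features on the full dataset leaks test labels into training.
X_leaky = SelectKBest(f_classif, k=20).fit_transform(X, y)
leaky = cross_val_score(LogisticRegression(max_iter=1000), X_leaky, y, cv=5).mean()

# Fix: put the selection step inside a Pipeline so it is refit on each training fold.
pipe = make_pipeline(SelectKBest(f_classif, k=20), LogisticRegression(max_iter=1000))
honest = cross_val_score(pipe, X, y, cv=5).mean()

print(f"leaky accuracy={leaky:.2f}, pipeline accuracy={honest:.2f}")
```

Because there is no real signal in the data, any accuracy well above chance in the first estimate is an artifact of the leak.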

Materials#

Machine learning on electro- and magneto-encephalography (EEG/MEG)#

Instructors#

Alex Gramfort is a research scientist at Meta in Paris, France. Before joining Meta, he was a team leader and PI at Inria. His work focuses on machine learning for brain signals. His main active open-source contributions are to scikit-learn, MNE-Python and braindecode.

Hubert Banville is a Research Scientist at InteraXon (maker of the Muse headband). He completed a PhD in the Parietal team at Inria, Université Paris-Saclay, under the supervision of Alexandre Gramfort and Denis Engemann. This work was done jointly at InteraXon. His research focuses on machine learning for processing EEG and other biosignals.

Objectives#

Understand the structure of electro- and magneto-encephalography signals

Preprocess and visualize MEG/EEG data

Learn about the ML techniques to decode evoked and induced MEG/EEG activity

Train machine learning models on MEG data using MNE-Python and PyTorch

Materials#

Brain decoding#

Within this session, we will go through the basics of running/applying decoding models to fMRI data. More precisely, we will explore how we can utilize different decoding models to estimate/predict what an agent is perceiving or doing based on recordings of responses/activity. Given the time restrictions, we will focus on biological agents, i.e. human participants, and thus brain responses obtained from fMRI.

Instructors#

Peer Herholz is a research affiliate at The Neuro (Montreal Neurological Institute-Hospital)/ORIGAMI lab (PI: Dr. JB Poline) at McGill University and the McGovern Institute for Brain Research/Senseable Intelligence Group (PI: Satra Ghosh). He obtained his PhD in cognitive & computational neuroscience, focusing on auditory processing in humans and machines. Afterwards, he conducted multiple postdocs further working at the intersection between neuroscience & artificial intelligence, as well as expanding the integration of open & reproducible scientific practices therein. Currently, he is working on research questions related to generalization in biological and artificial neural networks, comparing respective representations and their underlying computation.

Shima Rastegarnia obtained her Master’s in Computer Science from the University of Montreal. She completed her Master’s at the CRIUGM CNeuroMod project under the supervision of Dr. Pierre Bellec. Her research work focuses on implementing machine learning and deep learning models for brain decoding using functional magnetic resonance imaging datasets.

Alexis Thual is a PhD student working at Neurospin CEA and Inria (France). His work focuses on building open-source tools to efficiently compute comparisons between brains even though their anatomy and activation patterns differ widely across individuals. In particular, his methods can be used to draw comparisons between human and non-human primates. He is also a core developer of Nilearn.

Bertrand Thirion is a senior researcher in the MIND team, part of the Inria research institute, Saclay, France, which develops statistics and machine learning techniques for brain imaging. He contributes both algorithms and software, with a special focus on functional neuroimaging applications. He is involved in Neurospin, the CEA neuroimaging center, one of the leading high-field MRI centers for brain imaging. From 2018 to 2021, Bertrand Thirion was the head of the DATAIA Institute, which federates research on AI, data science and their societal impact at Paris-Saclay University. In 2020, he was appointed as a member of the expert committee in charge of advising the government during the Covid-19 pandemic. In 2021, he became the Head of science (délégué scientifique) of the Inria Saclay-Île-de-France research center. Bertrand Thirion is PI of the Karaib AI Chair of the Individual Brain Charting project.

Objectives 📍#

Understand the core aspects & principles of brain decoding, including dataset requirements

Explore different decoding models that can be applied to brain data: Support Vector Machines (SVMs), Multilayer Perceptrons (MLPs), and Graph-Convolutional Neural Networks (GCNs)

Get first hands-on experience using the respective Python libraries
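To give a feel for what applying a decoding model looks like in practice, here is a minimal sketch comparing two of the model families named above, an SVM and an MLP, using scikit-learn. The "brain data" are simulated (each stimulus category adds a hypothetical spatial pattern to noise), and GCNs are left out because they need a graph library; none of this is the session's actual code.

```python
import numpy as np
from sklearn.model_selection import cross_val_score
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

rng = np.random.default_rng(0)
n_trials, n_voxels, n_categories = 120, 200, 3

# Synthetic "brain data": each stimulus category adds its own spatial pattern to noise.
labels = np.repeat(np.arange(n_categories), n_trials // n_categories)
patterns = rng.normal(size=(n_categories, n_voxels))
X = 0.5 * patterns[labels] + rng.normal(size=(n_trials, n_voxels))

decoders = {
    "SVM": make_pipeline(StandardScaler(), SVC(kernel="linear", C=1.0)),
    "MLP": make_pipeline(
        StandardScaler(),
        MLPClassifier(hidden_layer_sizes=(32,), max_iter=500, random_state=0),
    ),
}

# Cross-validated decoding accuracy; chance level is 1 / n_categories.
results = {
    name: cross_val_score(model, X, labels, cv=5).mean()
    for name, model in decoders.items()
}
for name, acc in results.items():
    print(f"{name}: accuracy={acc:.2f} (chance={1 / n_categories:.2f})")
```

The data requirement is visible in the shapes: one row of voxel values per trial, plus one label per trial describing what the participant perceived or did.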

Questions you will be able to answer after taking this module 🎯#

What does brain decoding entail?

What kind of data and information is required for brain decoding analyses?

What are examples of suitable decoding models and what do they comprise?

Materials#

Brain encoding#

Instructors#

Isil Poyraz Bilgin is a postdoctoral research fellow at the CRIUGM CNeuroMod project, supervised by Prof. Leila Wehbe and Prof. Pierre Bellec. Her work focuses on implementing encoding models that predict brain activity during natural language processing using representations extracted from natural language models. Her main interest lies in developing optimizations to improve the predictive performance of the neural networks of language models with well-defined features of brain dynamics in processing naturalistic stimuli. She holds a bachelor’s degree in pure mathematics and a Ph.D. in Cybernetics. Her thesis work focused on the dynamic functional connectivity underlying the emergence of neural representations of novel semantic concepts in the human brain, using simultaneous EEG and fMRI.

Alexandre Pasquiou is a PhD student in the Inria MIND team, working with Christophe Pallier and Bertrand Thirion. His work focuses on understanding how the brain processes language. An engineer from CentraleSupélec (specialized in applied mathematics), he uses machine learning models to investigate the neural bases of language comprehension, relying on both encoding and decoding experimental paradigms. Some of his work has studied semantic and syntactic processing, the integration of contextual information, and the pitfalls of encoding models that leverage features derived from neural language models.

Objectives#

In the first part of the session we will focus on

Understanding the foundations of brain encoding, with:

Preparation of the fMRI and stimuli dataset

Building encoding models using ridge regression to extract direct representation of visual stimuli

Evaluation of the model performance using cross validation

Visualisation of the encoding scores on cortex

In the second part of the session we will dive into the predictive brain encoding models to

Understand the utilization of the high-dimensional stimuli features in brain encoding models

Explore brain encoding of processing the naturalistic stimuli (movie watching) by utilizing textual features extracted with a pretrained BERT language model, audio features extracted with the MFCC (Mel Frequency Cepstral Coefficients) technique, and audio features extracted with a ResNet-50 model in ridge regression models to predict the brain representations

Visualize the prediction accuracy in the cortex brain maps
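The core of the workflow described above, ridge regression from stimulus features to voxel responses scored by cross-validation, can be sketched on simulated data. The feature matrix here is random noise standing in for real stimulus embeddings (such as BERT or MFCC features), and the sizes, noise level, and `alphas` grid are assumptions chosen for the example.

```python
import numpy as np
from sklearn.linear_model import RidgeCV
from sklearn.metrics import r2_score
from sklearn.model_selection import KFold

rng = np.random.default_rng(0)
n_samples, n_features, n_voxels = 300, 20, 50

# Synthetic stimulus features (one row per fMRI volume) and voxel responses.
stimulus = rng.normal(size=(n_samples, n_features))
weights = rng.normal(size=(n_features, n_voxels))
weights[:, n_voxels // 2:] = 0.0  # second half of the voxels carries no stimulus signal
bold = stimulus @ weights + 2.0 * rng.normal(size=(n_samples, n_voxels))

# Cross-validated encoding scores: one R^2 per voxel, averaged over contiguous folds.
scores = np.zeros(n_voxels)
cv = KFold(n_splits=5)
for train, test in cv.split(stimulus):
    # RidgeCV picks the regularization strength using the training fold only.
    model = RidgeCV(alphas=np.logspace(-2, 3, 6)).fit(stimulus[train], bold[train])
    pred = model.predict(stimulus[test])
    scores += r2_score(bold[test], pred, multioutput="raw_values") / cv.get_n_splits()

print(f"responsive voxels: mean R^2 = {scores[:n_voxels // 2].mean():.2f}")
print(f"noise voxels:      mean R^2 = {scores[n_voxels // 2:].mean():.2f}")
```

The resulting `scores` vector holds one value per voxel, which is the quantity that would be projected back onto the cortex as an encoding-score map.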

Questions you will be able to answer after taking this module 🎯#

What are the constituents of brain encoding?

How could deep learning models utilize rich features of the naturalistic stimuli in the analysis of brain encoding?

How could the trained brain encoding models generalize to new brain data for different feature spaces?